Sampling from diffusion probabilistic models (DPMs) is often expensive for high-quality image generation and typically requires many steps with a large model. In this paper, we introduce sampling Trajectory Stitching (T-Stitch), a simple yet efficient technique to improve the sampling efficiency with little or no generation degradation.

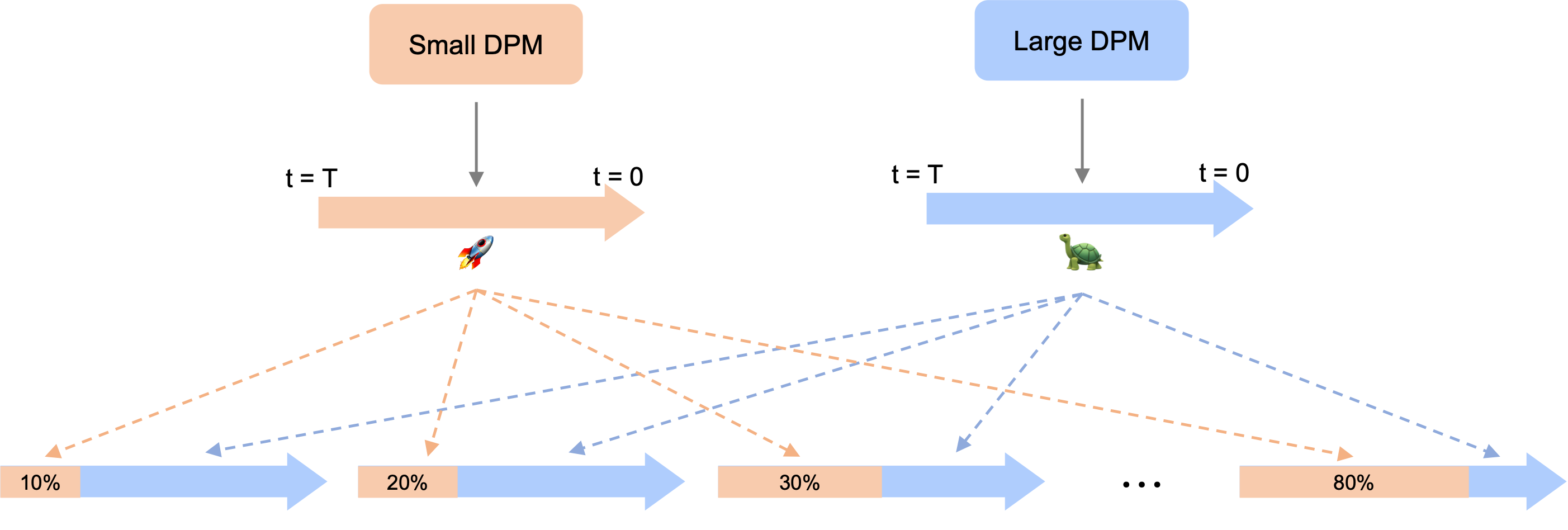

Instead of using a large DPM for the entire sampling trajectory, T-Stitch first uses a smaller DPM in the initial steps as a cheap drop-in replacement for the larger DPM, then switches to the larger DPM at a later stage. Our key insight is that different diffusion models learn similar encodings under the same training data distribution, and that smaller models are capable of generating good global structures in the early steps.
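The switching rule described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function names (`stitch_schedule`, `ddim_step`, `t_stitch_sample`), the toy denoisers, and the linear `alpha_bars` schedule are all assumptions made for the example, and a deterministic DDIM update is used only because DDIM is the sampler named in the captions.

```python
import numpy as np

def stitch_schedule(num_steps, small_fraction):
    """Assign a model name to each sampling step, earliest (noisiest) step first."""
    n_small = int(round(num_steps * small_fraction))
    return ["small"] * n_small + ["large"] * (num_steps - n_small)

def ddim_step(x, eps, alpha_bar_t, alpha_bar_prev):
    """Deterministic DDIM update (eta = 0) from the predicted noise eps."""
    x0_pred = (x - np.sqrt(1.0 - alpha_bar_t) * eps) / np.sqrt(alpha_bar_t)
    return np.sqrt(alpha_bar_prev) * x0_pred + np.sqrt(1.0 - alpha_bar_prev) * eps

def t_stitch_sample(denoisers, x_start, alpha_bars, small_fraction):
    """Run one trajectory, using the small denoiser first and the large one afterwards.

    alpha_bars has num_steps + 1 entries, ordered from noisiest to cleanest.
    """
    num_steps = len(alpha_bars) - 1
    schedule = stitch_schedule(num_steps, small_fraction)
    x = x_start
    for i, name in enumerate(schedule):
        eps = denoisers[name](x, i)  # both models act on the same latent
        x = ddim_step(x, eps, alpha_bars[i], alpha_bars[i + 1])
    return x, schedule

# Hypothetical toy denoisers that only count how often each one is called.
calls = {"small": 0, "large": 0}

def make_toy_denoiser(name, scale):
    def denoiser(x, step):
        calls[name] += 1
        return scale * x
    return denoiser

denoisers = {
    "small": make_toy_denoiser("small", 0.10),
    "large": make_toy_denoiser("large", 0.12),
}
num_steps = 10
alpha_bars = np.linspace(0.01, 0.999, num_steps + 1)  # assumed toy noise schedule
x_start = np.random.default_rng(0).standard_normal((2, 4))

sample, schedule = t_stitch_sample(denoisers, x_start, alpha_bars, small_fraction=0.4)
print(schedule)
print(calls)
```

Because only the choice of denoiser changes from step to step, the loop needs no retraining; it does assume that both models operate in the same latent space and accept the same conditioning.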

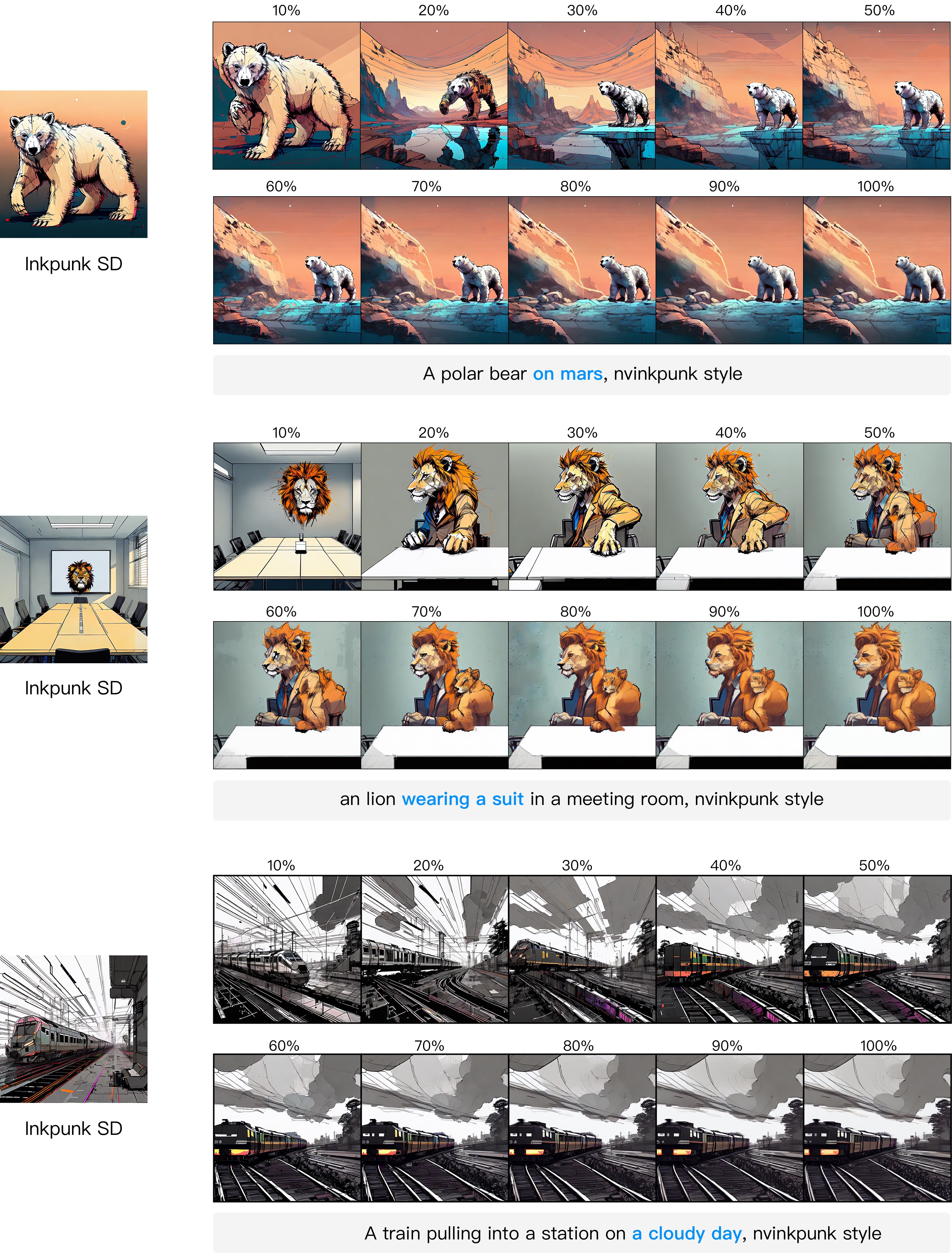

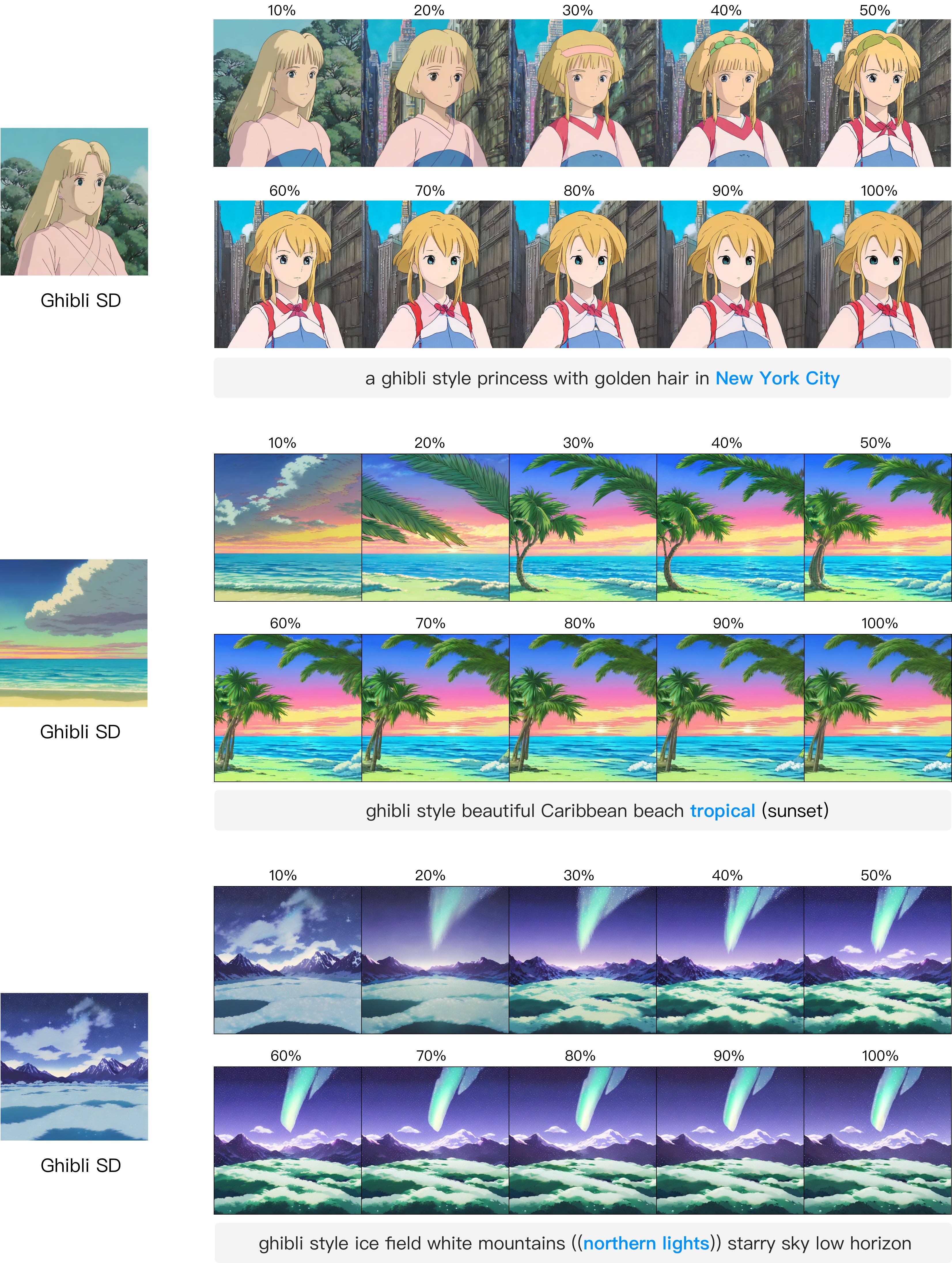

Extensive experiments demonstrate that T-Stitch is training-free, generally applicable to different architectures, and complementary to most existing fast sampling techniques, with flexible speed and quality trade-offs. On DiT-XL, for example, 40% of the early timesteps can be safely replaced with a 10x faster DiT-S without a performance drop on class-conditional ImageNet generation. We further show that our method can also be used as a drop-in technique not only to accelerate the popular pretrained Stable Diffusion (SD) models but also to improve the prompt alignment of stylized SD models from the public model zoo.
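For a rough sense of what the DiT-XL/DiT-S example implies, the arithmetic below estimates the per-image cost relative to running the large model alone. It is a back-of-the-envelope sketch, not a measured result: it assumes every step of a given model costs the same, reads "10x faster" as one tenth of the per-step cost, and ignores fixed overheads such as decoding. The helper `relative_cost` is a hypothetical name introduced here.

```python
def relative_cost(small_fraction, small_speedup):
    """Cost of a stitched trajectory relative to using only the large model.

    small_fraction: share of steps run by the small model (0 to 1).
    small_speedup:  how many times faster one small-model step is (assumed constant).
    """
    return small_fraction / small_speedup + (1.0 - small_fraction)

cost = relative_cost(small_fraction=0.4, small_speedup=10.0)
estimated_speedup = 1.0 / cost

print(f"relative cost: {cost:.2f}")
print(f"estimated speedup: {estimated_speedup:.2f}x")
```

Under these assumptions the stitched trajectory costs about 0.64 of the large-model baseline, or roughly a 1.56x speedup; actual wall-clock gains depend on hardware and batch size.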

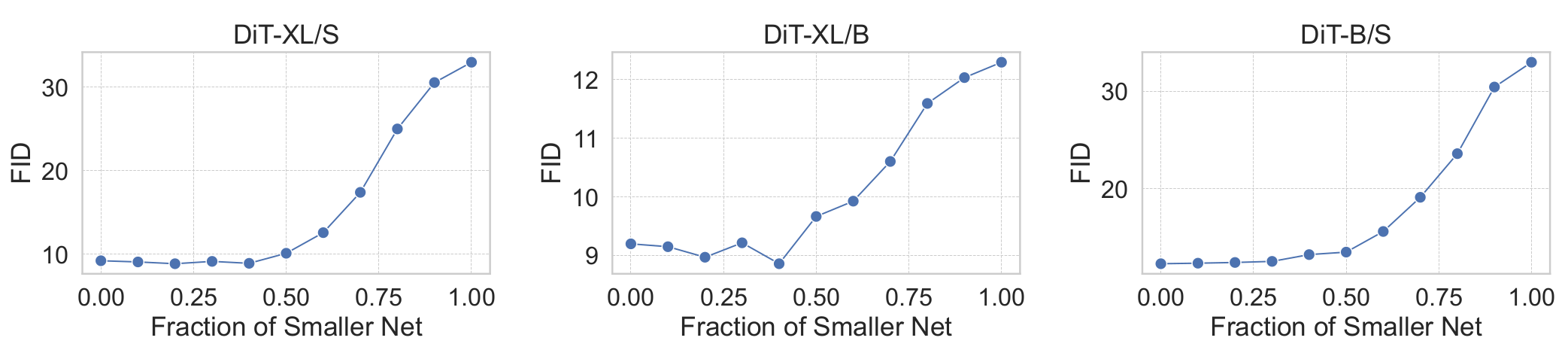

T-Stitch with two-model combinations: DiT-XL/S, DiT-XL/B, and DiT-B/S. We adopt DDIM with 100 timesteps and a classifier-free guidance scale of 1.5.

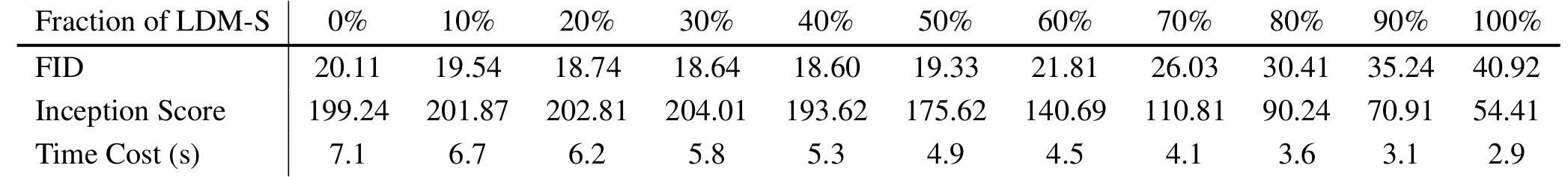

T-Stitch with U-Net based LDM (Rombach et al., 2022) and LDM-S on class-conditional ImageNet. All evaluations are based on DDIM and 100 timesteps. We adopt a classifier-free guidance scale of 3.0. The time cost is measured by generating 8 images on one RTX 3090.

T-Stitch with DiT-S and U-ViT H (Bao et al., 2023), under DPM-Solver++, 50 steps, guidance scale of 1.5. The time cost is measured by generating 8 images on one RTX 3090.

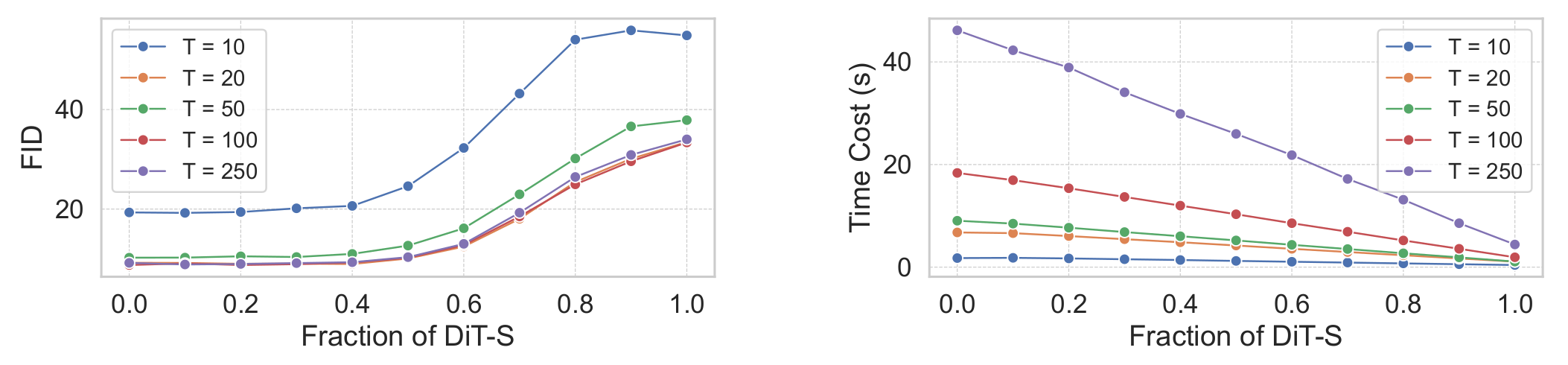

Left: We compare FID across different numbers of sampling steps. Right: We visualize the time cost of generating 8 images with different numbers of steps, based on DDIM and a classifier-free guidance scale of 1.5. “T” denotes the number of sampling steps.

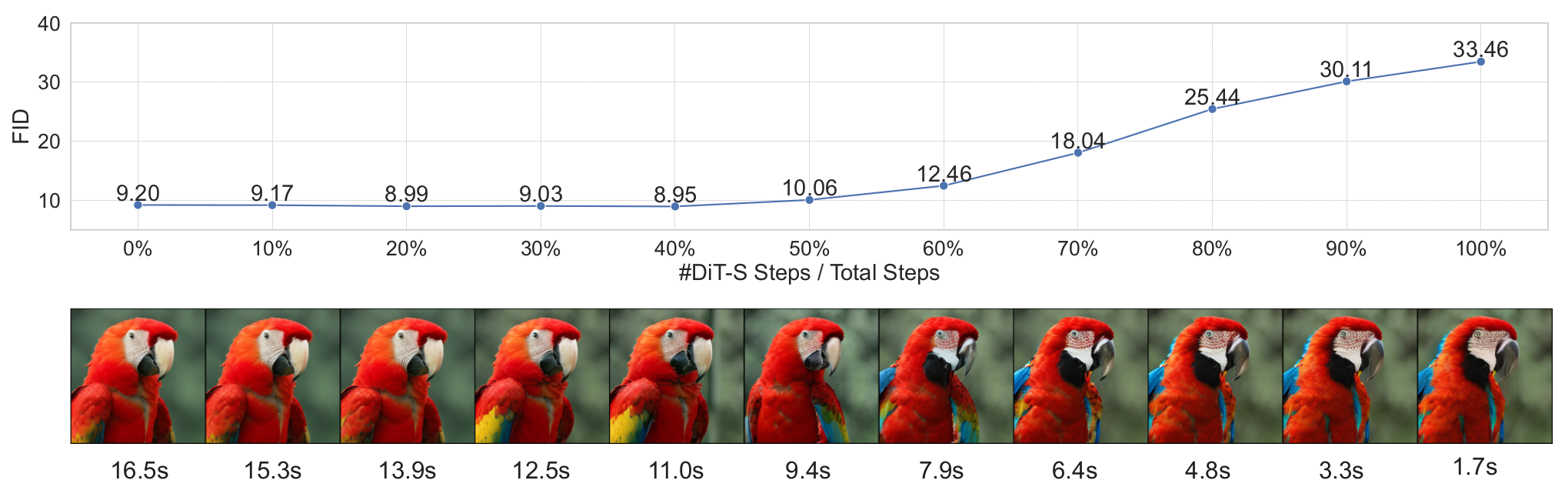

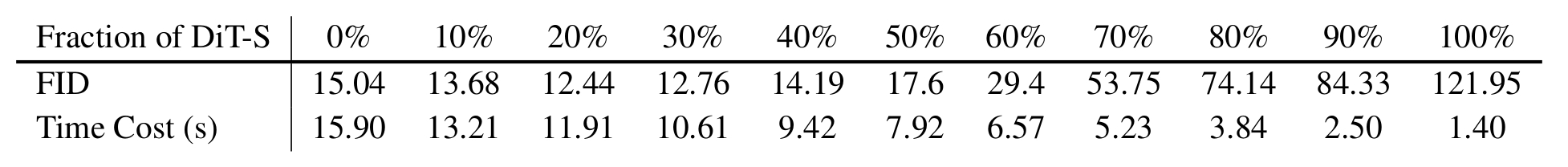

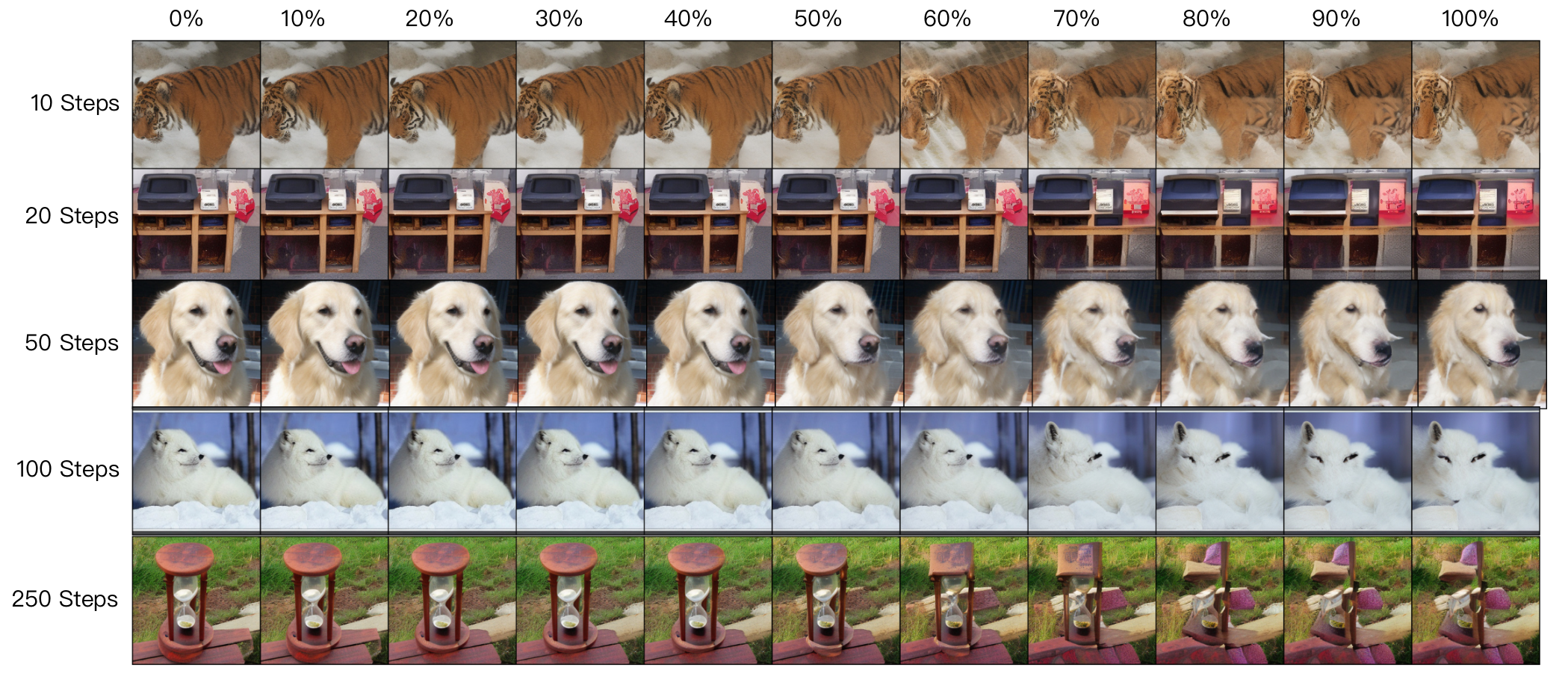

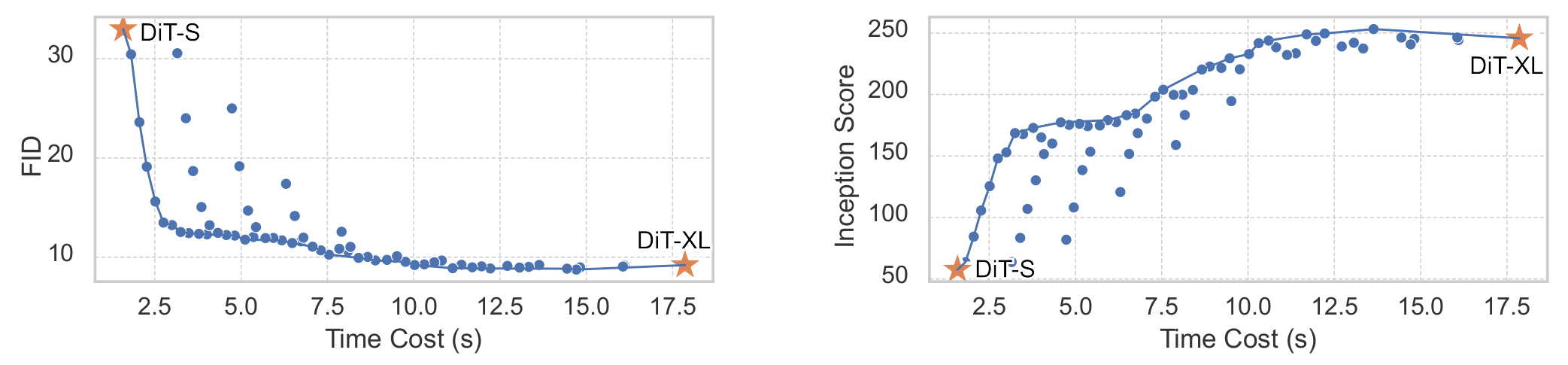

Based on DDIM and a classifier-free guidance scale of 1.5, we stitch the trajectories from DiT-S and DiT-XL and progressively increase the fraction (%) of DiT-S timesteps at the beginning.

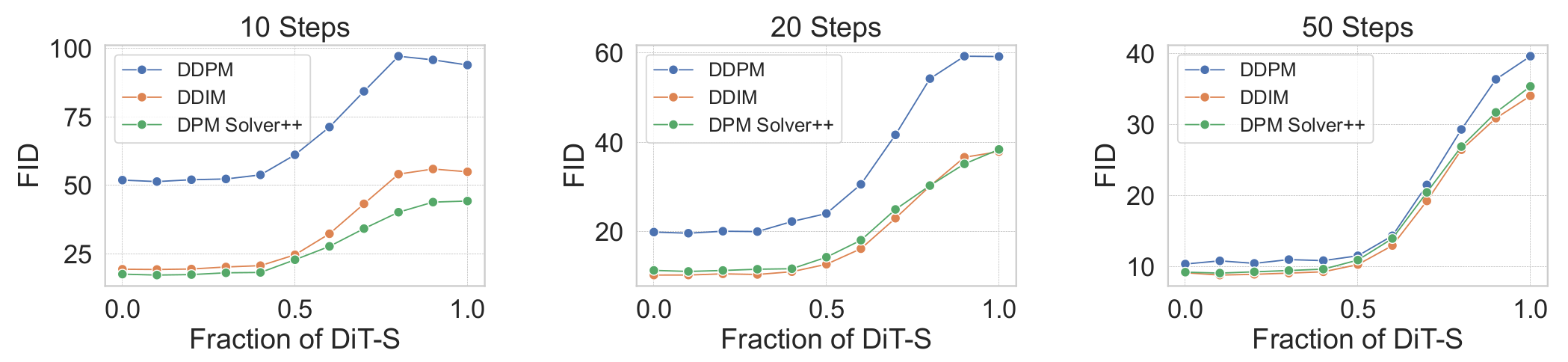

Effect of T-Stitch with different samplers, under a guidance scale of 1.5.

T-Stitch based on three models: DiT-S, DiT-B and DiT-XL. We adopt DDIM 100 timesteps with a classifier-free guidance scale of 1.5. We highlight the Pareto frontier in lines.
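Extending the two-model rule to three models amounts to splitting the trajectory into consecutive segments. The sketch below shows one way to turn per-model fractions into a per-step assignment. It assumes the models are ordered from smallest to largest along the trajectory, in line with the small-first rule stated earlier; the helper `allocate_steps` and the 40/30/30 split are hypothetical choices for illustration, not settings taken from the experiments.

```python
from collections import Counter

def allocate_steps(num_steps, fractions):
    """Map each sampling step to a model name.

    fractions: list of (model_name, fraction) pairs in trajectory order,
               earliest (noisiest) segment first; fractions must sum to 1.
    """
    total = sum(frac for _, frac in fractions)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    schedule = []
    cumulative = 0.0
    for name, frac in fractions:
        cumulative += frac
        # Round the cumulative boundary so segment lengths always add up to num_steps.
        end = int(round(cumulative * num_steps))
        schedule.extend([name] * (end - len(schedule)))
    return schedule

# Hypothetical split for a 100-step sampler.
three_model_schedule = allocate_steps(
    100, [("DiT-S", 0.4), ("DiT-B", 0.3), ("DiT-XL", 0.3)]
)
step_counts = Counter(three_model_schedule)
print(step_counts)
```

Sweeping the fractions over a grid and recording quality and time for each schedule is one way to trace out a speed and quality frontier like the one highlighted in the figure.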

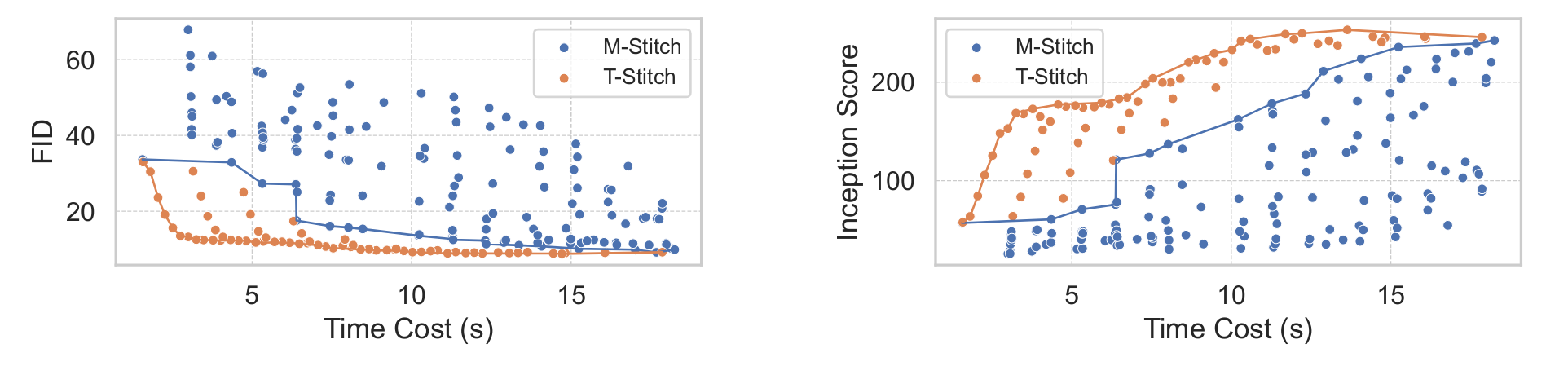

T-Stitch vs. model stitching (Pan et al., 2023) based on DiTs and DDIM 100 steps, with a classifier-free guidance scale of 1.5.

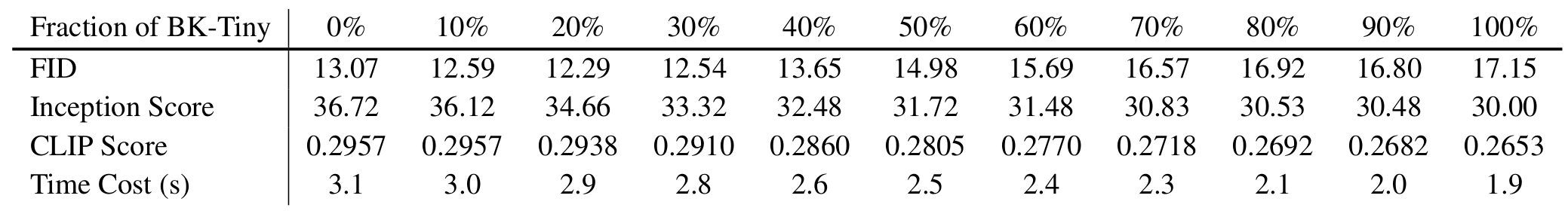

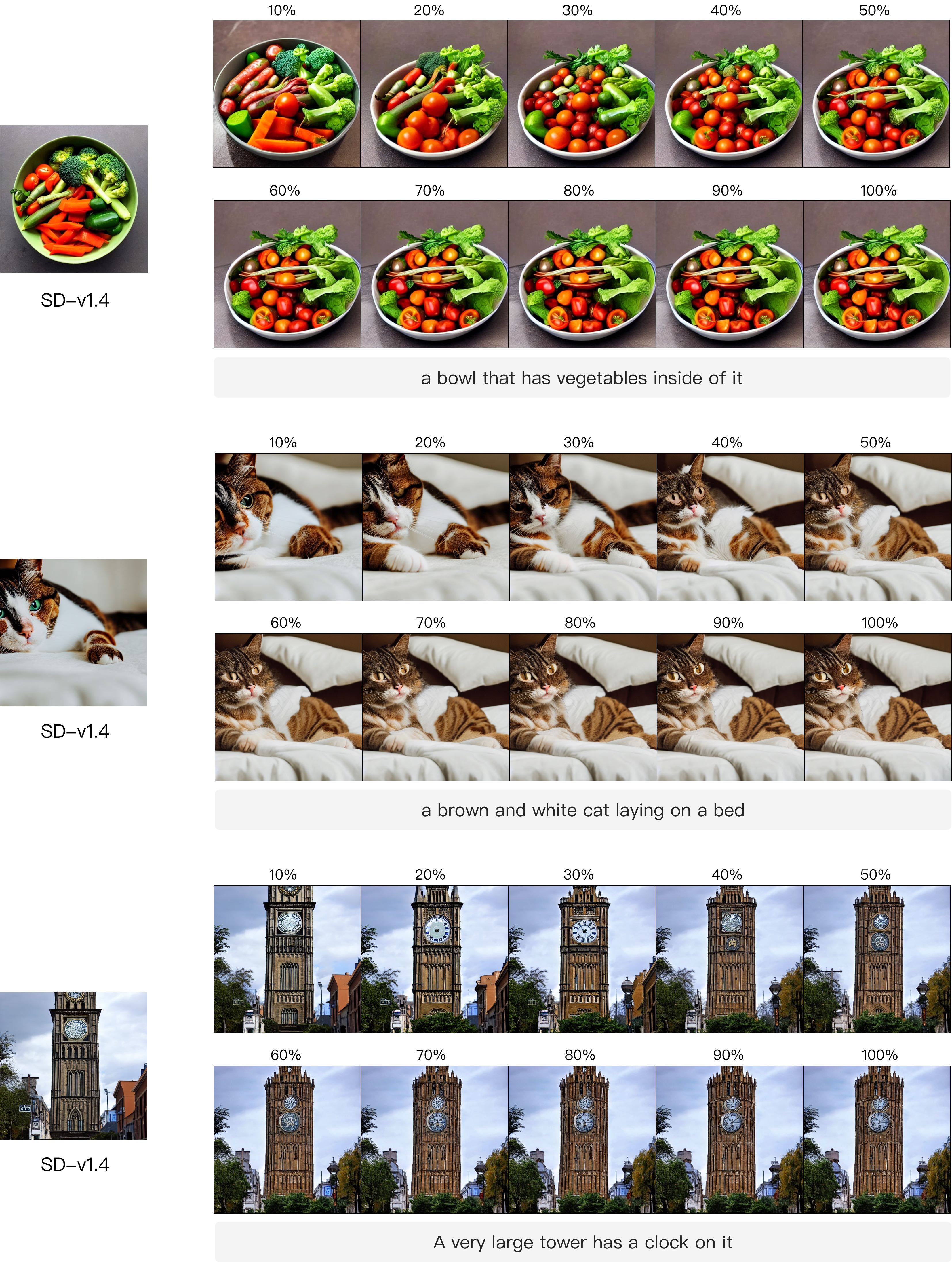

T-Stitch with BK-Tiny (Kim et al., 2023) and SD v1.4. We report FID, Inception Score (IS), and CLIP score (Hessel et al., 2021) on the MS-COCO 256x256 benchmark. The time cost is measured by generating one image on one RTX 3090.

"a ghibli style princess with golden hair in New York City"

@article{pan2024tstitch,
  author  = {Zizheng Pan and Bohan Zhuang and De-An Huang and Weili Nie and Zhiding Yu and Chaowei Xiao and Jianfei Cai and Anima Anandkumar},
  title   = {T-Stitch: Accelerating Sampling in Pre-trained Diffusion Models with Trajectory Stitching},
  journal = {arXiv},
  year    = {2024},
}